Our research addresses the theoretical foundations and practical applications of computer vision and machine learning that enable an embodied AI agent to perceive, predict and interact with the dynamic environment around it. We are interested in discovering and proposing fundamental principles, algorithms and practical implementations for solving high-level visual perception problems such as:

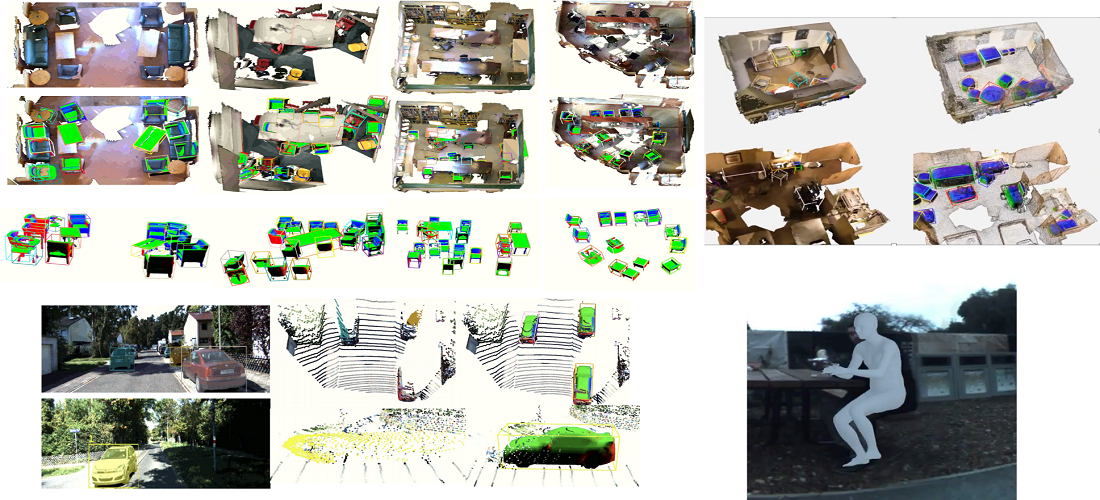

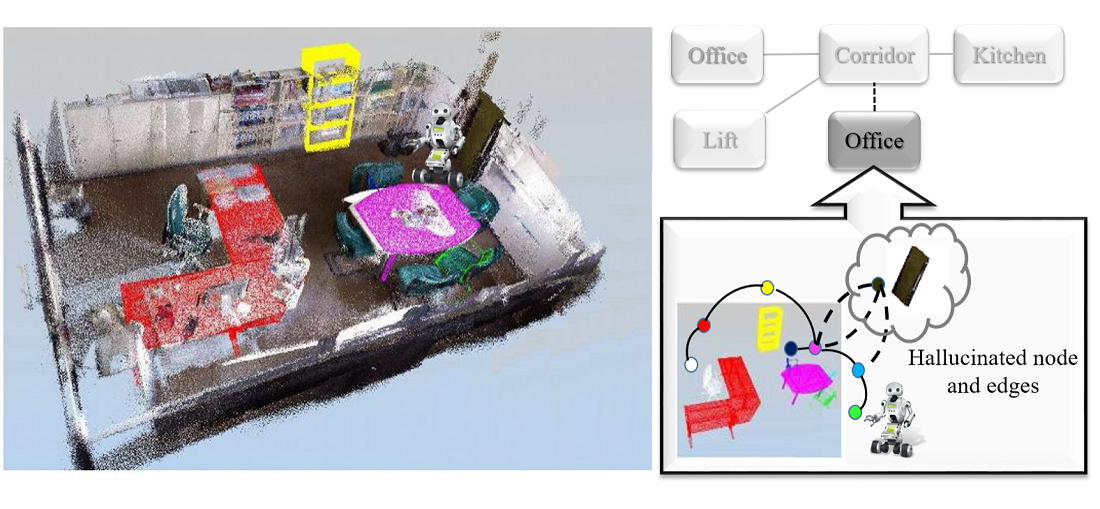

- Object and scene understanding and reconstruction,

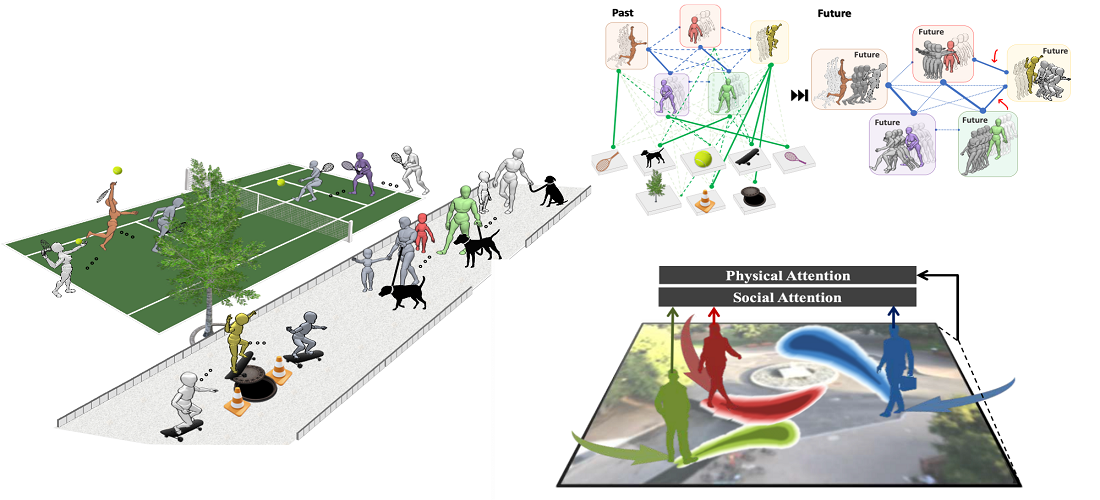

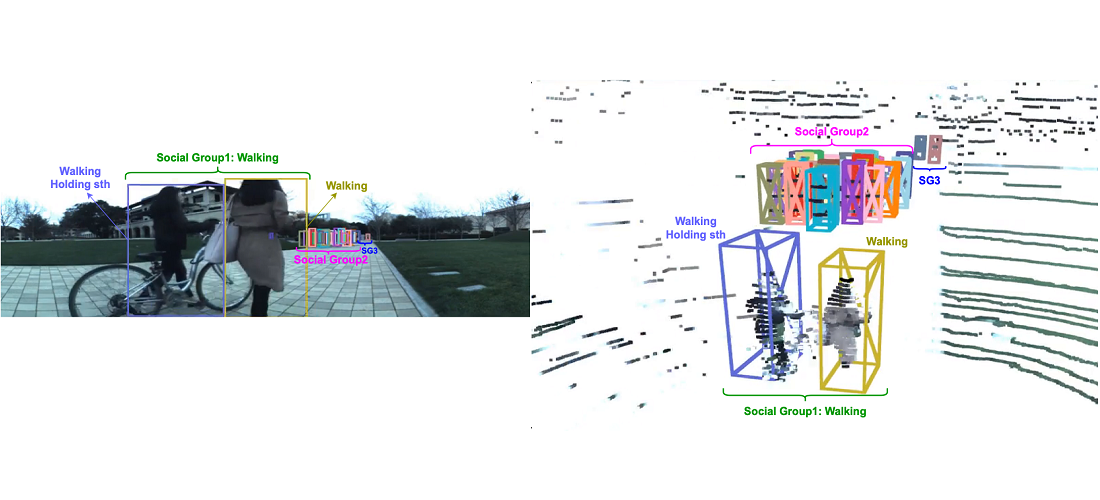

- Prediction and reasoning about human motion, activity and behaviour in the presence of physical interactions with the environment and social interactions with other people.

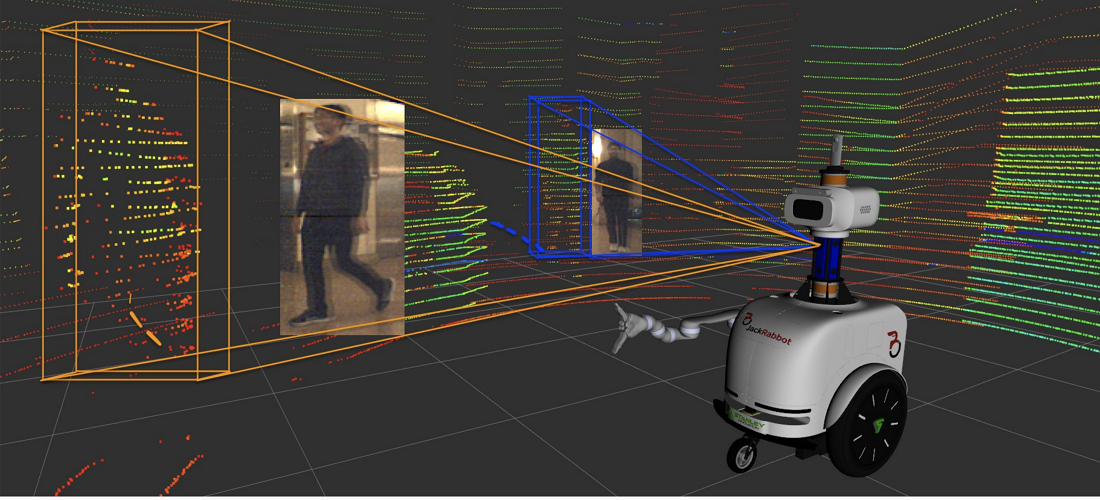

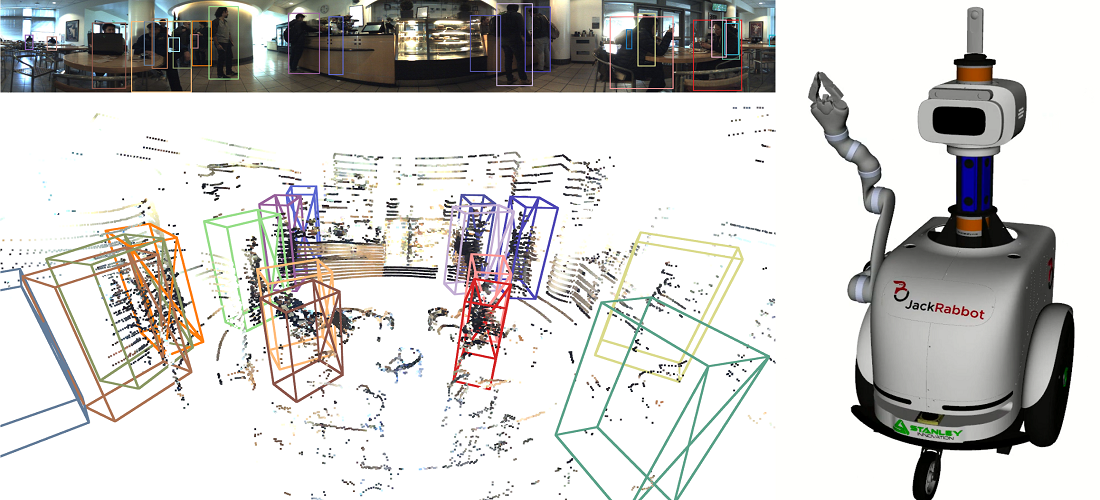

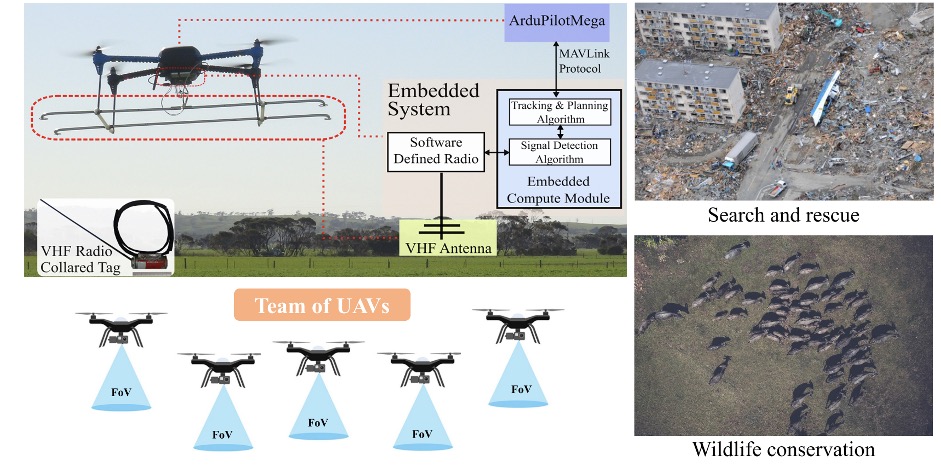

Our overarching aim is to develop an end-to-end perception system for an embodied agent to learn, perceive and act simultaneously through interaction with the dynamic world.

Some of our seminal works:

- Unifying Flow, Stereo and Depth Estimation, TPAMI 2023

- GMFlow: Learning Optical Flow via Global Matching, CVPR 2022

- JRDB: A Dataset and Benchmark of Egocentric Robot Visual Perception of Humans in Built Environments, TPAMI 2021

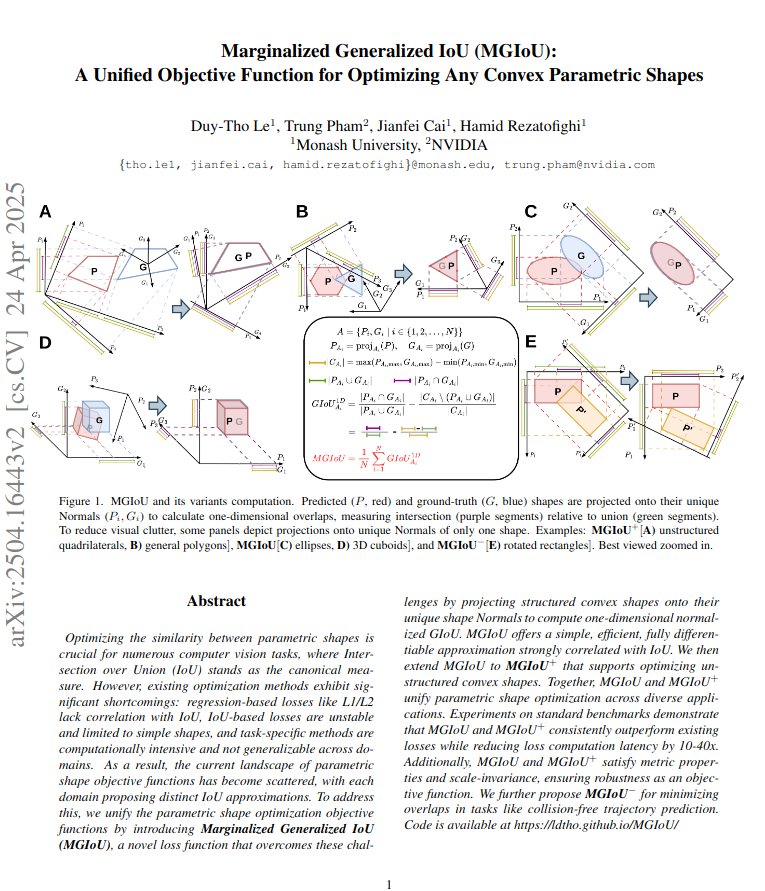

- Generalized Intersection over Union: A Metric and A Loss for Bounding Box Regression, CVPR 2019

- SoPhie: An Attentive GAN for Predicting Paths Compliant to Social and Physical Constraints, CVPR 2019

- Social-BiGAT: Multimodal Trajectory Forecasting using Bicycle-GAN and Graph Attention Networks, NeurIPS 2019

- Online Multi-Target Tracking Using Recurrent Neural Networks, AAAI 2017

- Joint Probabilistic Data Association Revisited, ICCV 2015

Check our publications for a complete list.